Introduction

Artificial intelligence (AI) and machine learning (ML) have become transformative technologies, with applications ranging from natural language processing to the automotive sector. These applications need substantial computing power, and memory is an often neglected resource. Fast memory is crucial for AI and ML workloads, and GDDR6 has established itself as a prominent option wherever high speed and computing power are required. This article examines the use of GDDR6 in AI and ML applications, along with current intellectual property (IP) trends in this field.

Architecture of GDDR6

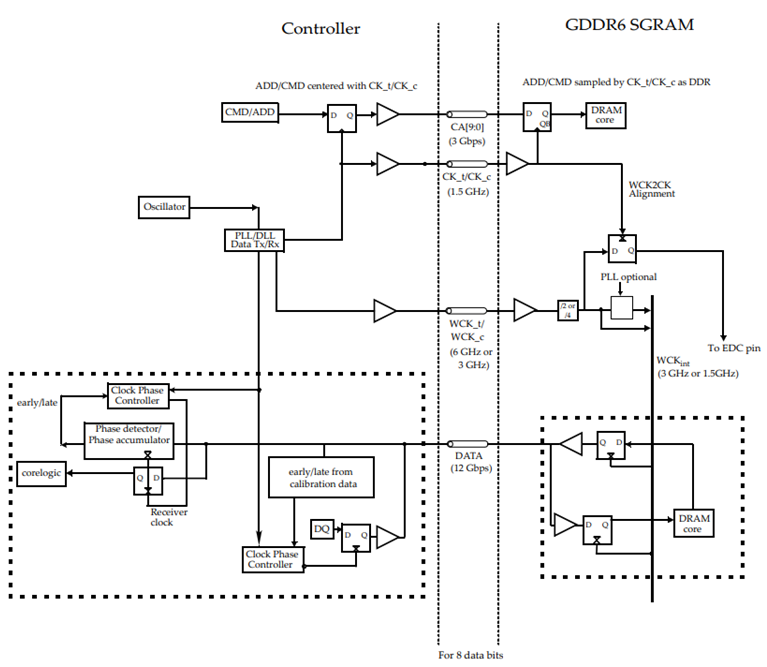

GDDR6 is a high-speed dynamic random-access memory designed for applications with high bandwidth requirements. The high-speed interface of GDDR6 SGRAM is designed for point-to-point communication with a host controller. To achieve high-speed operation, GDDR6 uses a 16n prefetch architecture and a DDR or QDR interface. The device has two completely independent channels, each 16 bits wide.

Figure 1 Block diagram [Source]
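As a rough illustration of what the 16n prefetch and the two 16-bit channels imply, the short Python sketch below works out the size of a single memory access on one channel. The constant and function names are illustrative assumptions, not part of any GDDR6 specification or vendor API.

```python
# Illustrative arithmetic only; all names here are hypothetical.
PREFETCH_N = 16          # 16n prefetch: 16 bits per data pin per access
CHANNEL_WIDTH_BITS = 16  # each channel is 16 bits wide
NUM_CHANNELS = 2         # two independent channels per device


def access_bits_per_channel(prefetch=PREFETCH_N, width_bits=CHANNEL_WIDTH_BITS):
    """Bits moved by one read or write access on a single channel."""
    return prefetch * width_bits


def access_bytes_per_channel(prefetch=PREFETCH_N, width_bits=CHANNEL_WIDTH_BITS):
    """Same quantity as access_bits_per_channel, expressed in bytes."""
    return access_bits_per_channel(prefetch, width_bits) // 8


if __name__ == "__main__":
    print(access_bits_per_channel())   # 256
    print(access_bytes_per_channel())  # 32
```

Because the two channels are independent, each one can carry its own 256-bit access.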

The Role of GDDR6 in AI and ML

AI and ML workflows, including both the training and inference phases, require large-scale data processing. GPUs (Graphics Processing Units) have evolved into the workhorses of AI and ML systems for making sense of this data. Their parallel processing capabilities are well suited to the computational demands of AI and ML workloads.

Data is central to these workloads: high-speed memory is needed to store and retrieve massive volumes of it, and GPU performance depends on how quickly that data can be supplied. Because the earlier GDDR5 and GDDR5X generations could not handle data rates above 12 Gbps per pin, these applications demand faster memory, and this is where GDDR6 plays a crucial role. Gains in AI and ML performance require memory performance to keep pace, and High Bandwidth Memory (HBM) and GDDR6 offer best-in-class performance in this respect. The Rambus GDDR6 memory subsystem, for example, is designed for performance and power efficiency and was created to meet the high-bandwidth, low-latency requirements of AI and ML. Recent developments in artificial intelligence, virtual reality, deep learning, self-driving cars, and related fields have also significantly increased demand for HBM DRAM in gaming consoles and graphics cards.

Micron’s GDDR6 Memory

Micron’s industry-leading technology enables the next generation of faster, smarter global infrastructure, facilitating artificial intelligence (AI), machine learning, and generative AI for gaming. Micron launched GDDR6X with the NVIDIA GeForce® RTX™ 3090 and GeForce® RTX™ 3080 GPUs, where it supports high-performance computing, higher frame rates, and increased memory bandwidth.

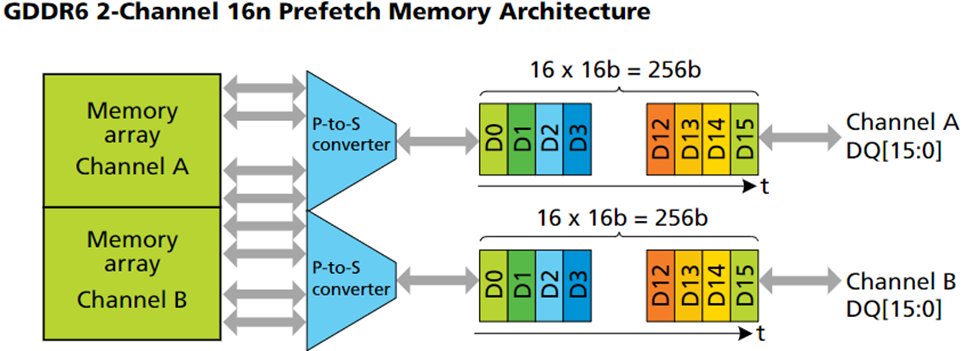

Micron GDDR6 SGRAMs are designed to operate from a 1.35 V power supply, making them ideal for graphics cards. GDDR6 devices present a 32-bit wide data interface to the memory controller, organized as two channels that are completely independent of one another. For each channel, a write or read memory access is 256 bits (32 bytes) wide. A parallel-to-serial converter turns each 256-bit data packet into sixteen 16-bit data words that are transmitted consecutively over the 16-bit data bus. Originally designed for graphics processing, GDDR6 is a high-performance memory solution that delivers faster data packet processing. GDDR6 also supports IEEE 1149.1-2013 compliant boundary scan, which allows the interconnect on the PCB to be tested during manufacturing using state-of-the-art automatic test pattern generation (ATPG) tools.

Figure 2 [Source]
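The parallel-to-serial step described above can be sketched in a few lines of Python. This is a simplified software model, not Micron's implementation: the function names are hypothetical, and the word ordering (least-significant word first) is an assumption made purely for illustration.

```python
# Simplified model of splitting one 256-bit access into 16 x 16-bit words.
# Names and the least-significant-word-first ordering are assumptions.
WORD_BITS = 16    # width of one channel's data bus
BURST_WORDS = 16  # words per access (256 bits / 16 bits)
PACKET_BITS = WORD_BITS * BURST_WORDS


def serialize(packet):
    """Split a 256-bit integer into sixteen 16-bit words."""
    if not 0 <= packet < (1 << PACKET_BITS):
        raise ValueError("packet must fit in 256 bits")
    mask = (1 << WORD_BITS) - 1
    return [(packet >> (i * WORD_BITS)) & mask for i in range(BURST_WORDS)]


def deserialize(words):
    """Reassemble sixteen 16-bit words into the original 256-bit integer."""
    if len(words) != BURST_WORDS:
        raise ValueError("expected exactly 16 words")
    packet = 0
    for i, word in enumerate(words):
        if not 0 <= word < (1 << WORD_BITS):
            raise ValueError("each word must fit in 16 bits")
        packet |= word << (i * WORD_BITS)
    return packet


if __name__ == "__main__":
    data = int.from_bytes(bytes(range(32)), "little")  # a 32-byte example packet
    words = serialize(data)
    assert deserialize(words) == data
```

In this model, a round trip through `serialize` and `deserialize` returns the original 32-byte packet, which mirrors what the receiving side of the 16-bit bus has to do with each burst.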

Rambus GDDR6 Memory Interface Subsystem

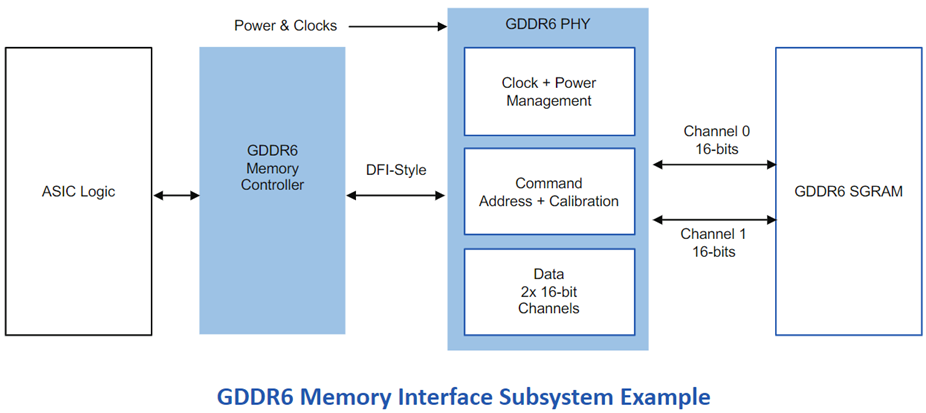

The Rambus GDDR6 interface fully supports the JEDEC GDDR6 standard (JESD250C). The Rambus GDDR6 memory interface subsystem meets the high-bandwidth, low-latency needs of AI/ML inference and is built for performance and power efficiency. It comprises a PHY and a digital controller, giving users a complete GDDR6 memory subsystem. It supports an industry-leading 24 Gb/s per pin across two channels of 16 bits each, for a combined data width of 32 bits. At 24 Gb/s per pin, the interface delivers a bandwidth of 96 GB/s.

Figure 3 [Source]
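The 96 GB/s figure follows directly from the per-pin data rate and the interface width. A minimal Python sketch of that arithmetic is shown below; the function name is a hypothetical helper for illustration, not part of any Rambus tooling.

```python
# Peak bandwidth = per-pin data rate x number of data pins / 8 bits per byte.
# The function name is hypothetical and used only for this illustration.
def peak_bandwidth_gbytes_per_s(data_rate_gbits_per_pin, bus_width_bits):
    """Return peak bandwidth in GB/s for a given pin rate and bus width."""
    return data_rate_gbits_per_pin * bus_width_bits / 8


if __name__ == "__main__":
    # Full 32-bit interface (two 16-bit channels) at 24 Gb/s per pin
    print(peak_bandwidth_gbytes_per_s(24, 32))  # 96.0
    # A single 16-bit channel at the same pin rate
    print(peak_bandwidth_gbytes_per_s(24, 16))  # 48.0
```

This is a theoretical peak; sustained bandwidth in a real system depends on the access pattern and controller behavior.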

Applications of GDDR6 Memory in AI/ML

A wide variety of AI/ML applications across many industries use GDDR6 memory. Here are some real-world examples:

1. FPGA-based AI applications

In a recent news release, Micron highlighted the development of high-performance FPGAs from Achronix that use GDDR6 memory for AI applications and are built on TSMC 7 nm process technology.

2. AI/ML inference at the edge

GDDR6 memory is ideal for AI/ML inference at the edge, where fast memory is essential. It offers higher memory bandwidth, better system speed, and low latency, which allows the system to be used for real-time processing of large amounts of data.

3. Advanced driver assistance systems (ADAS)

ADAS uses GDDR6 memory in visual recognition to process large amounts of visual data, in multi-sensor tracking and detection, and in real-time decision-making, where large amounts of neural-network data are analyzed to reduce accidents and improve passenger safety.

4. Cloud Gaming

Cloud gaming relies on fast GDDR6 memory to provide a smooth gaming experience.

5. Healthcare and Medicine

In the medical industry, GDDR6 enables faster analysis of medical data by the AI algorithms used for diagnosis and treatment.

IP Trends in GDDR6 Use in Machine Learning and Artificial Intelligence

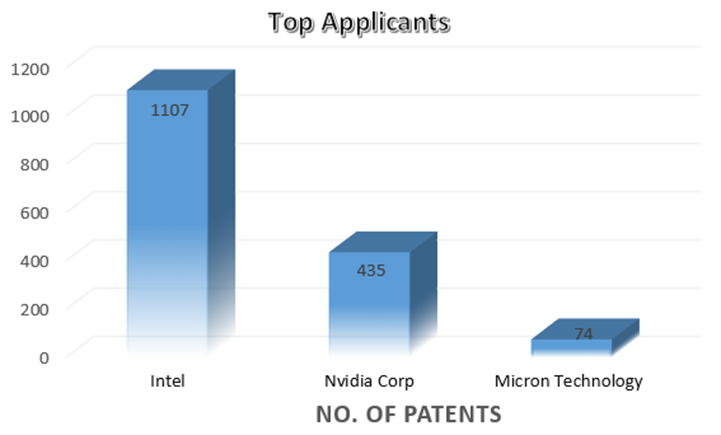

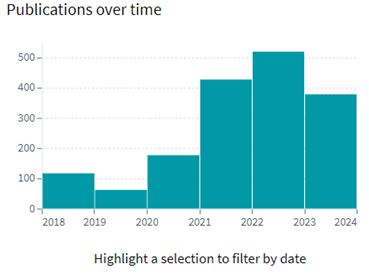

As high-speed, low-latency memory grows in importance, patent filings have grown significantly across the globe. The highest number of patents granted in a single year was 212, in 2022, and the highest number of patent applications filed was ~408, also in 2022.

Intel is the dominant player in the market, with ~1107 patent families. That is about 2.5 times as many as NVIDIA Corp., which comes second with 435 patent families. Micron Technology is the third-largest patent holder in the domain.

Other key players in the domain are SK Hynix, Samsung, and AMD.

[Source: https://www.lens.org/lens/search/patent/analysis?q=(GDDR6%20memory%20use)]

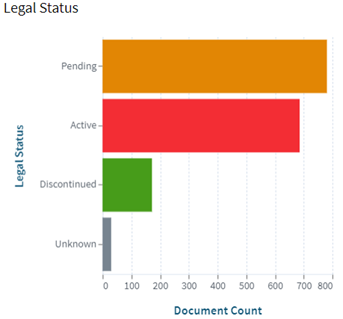

Following are the trends of publication and their legal status over time:

[Source: https://www.lens.org/lens/search/patent/analysis?q=(GDDR6%20memory%20use)]

Conclusion

High-speed memory is an unsung hero in the fast-paced world of AI and ML, where every millisecond matters. GDDR6 has stepped up to the plate, providing high bandwidth, low latency, and large capacity, which makes it an essential part of AI and ML systems. The IP trends for GDDR6 technology point to continued efforts to improve memory solutions for these cutting-edge technologies as demand for AI and ML capabilities rises. These developments bode well for future advances in AI and ML.